
Data Fundamentals Part-1: Data lake

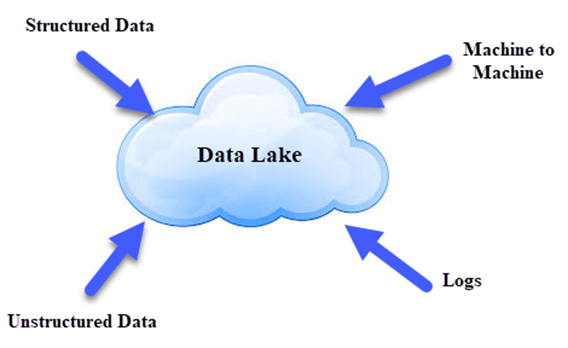

Simply put, a data lake is a central place to store large volumes of data of all kinds, gathered from various sources.

Re-platforming and other drivers for data lake architecture evolution take various forms.

Data lakes started on Hadoop but are migrating elsewhere:

In fact, the earliest data lakes were almost exclusively on Hadoop. The current wave of dissatisfaction with Hadoop is driving a number of lake migrations off of Hadoop. For example, after living with their lakes for a year or more, many users discover that key use cases demand more and better relational functionality than can be retrofitted onto Hadoop. In a related trend, many organizations proved the value of data lakes on premises and are now migrating to cloud data platforms for their relational functionality, elasticity, minimal administration, and cost control.

A modern data lake must serve a wider range of users and their needs:

The first users of data lakes were mostly data scientists and data analysts who program algorithm-based analytics for data mining, statistics, clustering, and machine learning. As lakes have become more multitenant (serving more user types and use cases), set-based analytics (reporting at scale, broad data exploration, self-service queries, and data prep) has arisen as a requirement for the lake -- and that requires a relational database.
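To make "set-based analytics" concrete, here is a minimal sketch of a reporting-style query that aggregates over a whole set of rows at once. It uses Python's built-in `sqlite3` module purely as a stand-in for whatever relational engine sits on the lake; the `sales` table, its columns, and the sample rows are assumptions made up for illustration.

```python
import sqlite3


def revenue_by_region(rows):
    """Aggregate sales per region with one set-based SQL statement."""
    # In-memory database as a stand-in for a relational layer on the lake.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    # The query describes the result for the whole set; no row-by-row loop.
    result = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()
    conn.close()
    return result


# Hypothetical sample data.
SAMPLE_ROWS = [("east", 100.0), ("west", 50.0), ("east", 25.0)]
REPORT = revenue_by_region(SAMPLE_ROWS)
print(REPORT)
```

Self-service queries and reporting at scale lean on this style of declarative aggregation, which is why the paragraph above ties them to relational functionality.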

Cloud has recently become the preferred platform for data-driven applications:

Cloud is no longer just for operational applications. Many TDWI clients first proved the value of cloud as a general computing platform by adopting or upgrading to cloud-based applications deployed in the software-as-a-service (SaaS) model. Data warehousing, data lakes, reporting, and analytics are now aggressively adopting or migrating to cloud tools and data platforms. This is a normal maturity life cycle -- many new technologies are first adopted for operational applications, then for data-driven, analytics applications.

New cloud data platforms are now fully proven:

The early adoption phase is over, spurring a rush of migrations to these platforms for all kinds of data sets. As mentioned earlier, cloud data warehouses and other data platforms have the relational functionality that users need. In addition, they support the push-down execution of custom programming in Java, R, and Python. Early adopters have corroborated that the platforms perform and scale elastically, as advertised, while maintaining high availability and tight security. This gives more organizations the confidence they need to make their own commitments to cloud data platforms.

User best practices for data lakes are far more sophisticated today:

Early data lakes suffered abusive practices such as data dumping, neglect of data standards, and a disregard for compliance. Over time, lake users have corrected these poor practices. Furthermore, users have realized that the data lake -- like any enterprise data set -- benefits from more structure, quality, curation, and governance.

The catch is to make these improvements in moderation without harming the spirit of the data lake as a repository for massive volumes of raw source data fit for broad exploration, discovery, and many analytics approaches. It's a bit of a balancing act, but data lake best practices are now established for maintaining detailed source data for discovery analytics while also providing cleansed and lightly standardized data for set-based analytics.
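One way to picture this balancing act is as two zones: a raw zone that is never modified and a curated zone derived from it. The sketch below is only an illustration under assumed names; `RAW_ZONE`, `CURATED_ZONE`, the record fields, and the cleansing rules are all hypothetical, and a real lake would apply the same idea to files or tables rather than in-memory lists.

```python
from copy import deepcopy

# Hypothetical raw records, kept exactly as they arrived from the sources.
RAW_ZONE = [
    {"source": "crm", "customer": "  Alice ", "country": "us", "amount": "10.50"},
    {"source": "web", "customer": "BOB", "country": "US", "amount": "7"},
    {"source": "web", "customer": "carol", "country": "De", "amount": "n/a"},
]


def standardize(record):
    """Return a lightly standardized copy, or None if the amount is unusable."""
    try:
        amount = float(record["amount"])
    except ValueError:
        return None
    return {
        "source": record["source"],
        "customer": record["customer"].strip().title(),
        "country": record["country"].strip().upper(),
        "amount": amount,
    }


def build_curated_zone(raw_records):
    """Derive cleansed records without touching the raw input."""
    curated = []
    for record in raw_records:
        clean = standardize(record)
        if clean is not None:
            curated.append(clean)
    return curated


raw_snapshot = deepcopy(RAW_ZONE)
CURATED_ZONE = build_curated_zone(RAW_ZONE)
print(len(RAW_ZONE), len(CURATED_ZONE))
```

The raw records, including the one with an unusable amount, stay available for discovery work, while the curated list gives set-based reporting consistent names, country codes, and numeric amounts.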

Next: Data Fundamentals Part-2: Data lake House